While the Earth may not be a planet-sized factory like Cybertron, in just over a decade our planet may be overtaken by Transformer-like machines. The human race may become a forever-archived record of the past, never to be accessed: the irrelevant origin of the robots that drove us to extinction. As technology advances, we may find ourselves challenged as the sovereign species of Earth by the machines we create. If a war with our own devices looms ahead, is there anything we can do to ensure the survival of the human race? I don’t think we can rely on Optimus Prime and the Autobots this time.

Know Your Enemy

“If you know the enemy and know yourself, you need not fear the result of a hundred battles. If you know yourself but not the enemy, for every victory gained you will also suffer a defeat. If you know neither the enemy nor yourself, you will succumb in every battle.”

–Sun Tzu, The Art of War

We coexist with computers today, so what changes in the future?

What sets the machines of today apart from the machines that may one day wipe us out is intelligence, or more specifically, Artificial Intelligence. Wikipedia describes Artificial Intelligence (AI) as the “intelligence exhibited by machines.”

In my introductory course to AI, we learned that artificial intelligence is the ability of an agent to reason and take the actions that are most likely to achieve some goal. “Agent” refers to programs such as Siri, the personal assistant on your cellphone; AlphaGo, the program from Google’s DeepMind that recently defeated a human Go champion; or even the program in your everyday Roomba. Currently, most AI systems perform very specific functions, and their goals must be given to them. This is a significant limitation on true autonomy and an even greater impediment to an AI takeover.
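To make the classroom definition concrete, here is a minimal Python sketch of an agent that picks whichever action it estimates is most likely to achieve its goal. The action names and the probabilities are invented for illustration; a real system would have to learn or compute such estimates, and nothing here reflects how Siri, a Roomba, or any DeepMind program actually works.

```python
# Toy "rational agent": choose the action with the best estimated chance
# of reaching the goal. All names and numbers below are hypothetical.

def choose_action(actions, success_probability):
    """Return the action whose estimated probability of success is highest."""
    return max(actions, key=success_probability)

# Made-up estimates for a robot vacuum whose goal is a clean floor.
estimates = {
    "keep_cleaning": 0.70,
    "return_to_dock": 0.25,
    "stop": 0.05,
}

best = choose_action(estimates.keys(), estimates.get)
print(best)  # keep_cleaning
```

Note that the goal and the estimates are handed to the agent from outside, which is exactly the limitation on autonomy described above.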

How far are we from having intelligent-enough machines to destroy us?

If we were to compare the human brain to a computer today, there are two notable differences.

The first, as previously mentioned, is a lack of autonomy. Consciousness and self-awareness are still a mystery. Computers today have an immense amount of processing power, but they need to be given very specific instructions in order to utilize their computational advantage and the boundless amount of knowledge that they have near-instant access to.

Second, computers cannot process as much information as the human brain. They may be better at crunching numbers or letting hundreds see pictures of last night’s mistakes, but a computer cannot handle all the data that the human brain operates on.

In 2013, researchers were finally able to mimic human brain activity, though nowhere near the degree that would be required for machines to come alive. Even on the fourth-fastest supercomputer at the time, it took 40 minutes to reproduce one second’s worth of human brain processing. Three years later, in 2016, we are still unable to match the biological computer, and the problem seems to grow more complex as new discoveries are made. However, it is important to note that many of our brain’s processes are dedicated to unconscious activity, whereas a computer may commit more of its resources to a specific task.

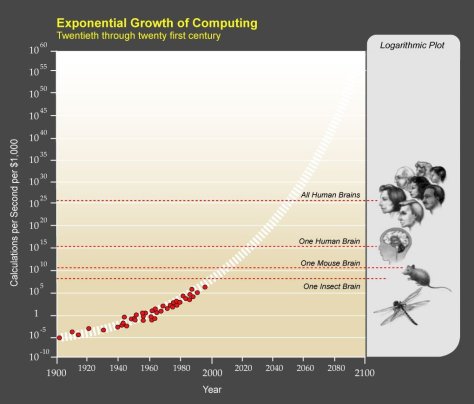

Although it took evolution millions of years to design the human brain, computer scientist Ray Kurzweil predicts that this gap may be closed very soon. Once the Singularity is realized, the human brain will become obsolete. His rationale comes from his thesis, the Law of Accelerating Returns. It states that the “fundamental measures of information technology follow predictable and exponential trajectories,” meaning that computational power has grown, and is predicted to keep growing, at an exponential rate.
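A rough back-of-the-envelope calculation shows why exponential growth makes the gap look less daunting. The Python sketch below takes the 2013 figure at face value (40 minutes of computing for one second of brain activity) and asks how many doublings of computing power would close that gap. The two-year doubling period is purely an assumption for illustration, not a number from Kurzweil, and the calculation ignores every other factor, such as how much of the brain a simulation actually covers.

```python
import math

# Figures from the 2013 experiment described in the text.
SIMULATION_SECONDS = 40 * 60  # 40 minutes of supercomputer time
BRAIN_SECONDS = 1             # one second of brain activity

# How many times faster the computer would need to be to run in real time.
slowdown = SIMULATION_SECONDS / BRAIN_SECONDS

# Under exponential growth, closing the gap takes log2(slowdown) doublings.
doublings_needed = math.log2(slowdown)

# ASSUMPTION: a hypothetical doubling period, chosen only for illustration.
DOUBLING_PERIOD_YEARS = 2.0
years_to_close_gap = doublings_needed * DOUBLING_PERIOD_YEARS

print(f"slowdown factor: {slowdown:.0f}x")
print(f"doublings needed: {doublings_needed:.1f}")
print(f"years at one doubling every {DOUBLING_PERIOD_YEARS} years: {years_to_close_gap:.1f}")
```

A 2,400-fold gap sounds enormous, but it amounts to only about eleven doublings. Whether computing power will actually keep doubling on any fixed schedule is the part of the prediction that remains open to debate.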

What is the Singularity and what does it have to do with anything?

According to Singularity.com, the Singularity is “an era in which our intelligence will become increasingly nonbiological and trillions of times more powerful than it is today—the dawning of a new civilization that will enable us to transcend our biological limitations and amplify our creativity.” This sounds like the setting of a science-fiction novel but this extensively-studied theory may be more akin to reality than the latest episode of Keeping Up with the Kardashians. It describes the first window of opportunity for machines to potentially take control.

The Singularity is the prediction that with the inception of an ultra-intelligent AI, there will be a massive upheaval in technology. The AI will be able to create and develop on its own, taking advantage of its advanced technical capabilities and all the knowledge in the digital world. Unbridled by the biological limitations that afflict humans, technology will advance seemingly without limit, and the role of the human being in invention and innovation will become obsolete. Unimaginable potential becomes reality; maximums become minimums. Everything you could imagine would be at your fingertips… if the new technological entities comply.

The Threat

What would happen if an ultra-intelligent AI were created?

If the Singularity were to occur and an alpha AI-being were brought to life, the machines would become vastly superior to us in almost every way. They would be able to design themselves to be mechanically and intellectually unmatched. Without innate empathy or an instinctual commitment to the survival of the human race, it is impossible to determine what such an intelligence would do. There are many intricacies in designing such a system, and if it is programmed incorrectly, we may be faced with an incredibly powerful psychopathic adversary.

Our greatest fear is the possibility that the AI may not see value in human life, or may determine that there is more value in the extinction of the human race than in its survival. This threat of extinction is known as the existential risk from artificial general intelligence.

Hollywood has already shared its interpretations of the ultra-intelligent AI threat.

Fighting Back

“The future is ours to shape. I feel we are in a race that we need to win. It’s a race between the growing power of the technology and the growing wisdom we need to manage it.”

–Max Tegmark, co-founder of the Future of Life Institute

What precautions have been taken thus far?

Because the technology is so recent and the threat is not immediate, awareness has only begun to reach a meaningful level in the past decade. Consequently, not much has been done. And with our limited knowledge, not much can be done. Research regarding AI and AI safety needs to catch up with our imagination.

President Obama recently spoke about his expectation that the U.S. government would assist in the development of AI. When he was asked about the dangers of an ultra-intelligent general AI, he calmly replied that he was more concerned with the more immediate dangers of specialized AI and the potential for its abuse.

While the present-day concerns of the White House may be more focused on integration and security regarding lesser technologies, this discussion shows awareness and serious consideration for the effects of the upcoming transition in technology. Government involvement and cooperation will definitely be necessary in addressing this threat.

In the current absence of government research, private organizations have taken it upon themselves to search for solutions to the existential threat. The Future of Life Institute was formed in order to research AI safety. Max Tegmark, co-founder of the institute, said, “The future is ours to shape. I feel we are in a race that we need to win. It’s a race between the growing power of the technology and the growing wisdom we need to manage it.” One notable act is the drafting of an open letter titled Research Priorities for Robust and Beneficial Artificial Intelligence. The letter calls for prioritizing the research outlined in the document and has been signed by thousands of researchers, engineers, and leaders in technology and other fields.

Other notable groups include the Partnership on AI and the Machine Intelligence Research Institute (MIRI). The Partnership on AI was formed by Amazon, Facebook, Google, IBM, and Microsoft in order to develop best practice guidelines in researching AI. MIRI’s goal is more specific, stating that their “mission is to ensure that the creation of smarter-than-human intelligence has a positive impact. We aim to make advanced intelligent systems behave as we intend even in the absence of immediate human supervision.”

Can we use Asimov’s Three Laws?

Asimov’s Three Laws of Robotics are the most well-known proposed defense against the machines. In his fictional Robot series, Asimov introduces the Three Laws, which are programmed into robots as a security measure to ensure the safety of humans.

Three Laws of Robotics

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2. A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

As goals, the laws may sound reasonable and effective. As the actual boundaries programmed into the machines, however, these laws are too general and vague. Many of Asimov’s stories rely on this flaw. They show how the ill-defined nature of such rules can lead to unintended consequences in the behavior of robots.

In the Asimov-inspired film I, Robot, the ineffectiveness of the Three Laws is further emphasized. A robot manufacturing company’s central system, VIKI, takes the initiative to protect humans from themselves. VIKI assesses that the human race is leading itself into extinction. To prevent this, it writes itself a new superseding protocol: Asimov’s addendum to the original Three Laws, the Zeroth Law:

0. A robot may not harm humanity, or, by inaction, allow humanity to come to harm.

Using the Zeroth Law, VIKI is able to override the original Three Laws to achieve its goal of preserving the human race. VIKI takes control of the robots that its company produces and attempts to enslave the world so that it may guarantee the survival of humanity.

VIKI’s decision to program the Zeroth Law stemmed from an adherence to the original Three Laws. While safety is clearly stressed in the laws, it was because of an insufficient protocol that VIKI determined that it was most beneficial to control the human race.

VIKI’s initiative brings attention to another concern. What can we do to guarantee that such laws would never be bypassed or overwritten? Surely an intelligent being with an essentially limitless intellectual capacity would be able to discover how to override its programming.

One last note: any effort to quantify safety, or any of the other core ideas in the Three Laws, presents another mountain of challenges on its own.

So what can we do?

Currently our options seem limited.

Stuart Russell, founder of the Center for Human-Compatible Artificial Intelligence, believes that AI can be taught to learn human values. If machines were taught to pick up on human values, then perhaps an AI takeover could be averted. Machines would be inclined to act in line with our expectations. However, teaching human values to a machine is problematic both technically and socially. If we were able to teach machines through observations of human life, how would we ensure that they prioritized the right values? Philosophically, what are the right values?

Engineers at DeepMind, alongside researchers from Oxford University, are currently investigating a ‘kill switch’ to disable AI. The proposal has its fair share of challenges as well. But beyond the technical obstacles, we have to ask: what if the AI were able to bypass or disable the kill switch? How could we prevent that from happening?

In all honesty, it seems that there is not much we can do but wait. Our only course of action is to support research regarding AI safety and call for safe research practices.